So I was listening to a recent episode of The Amp Hour, “An off-the-cuff radio show and podcast for electronics enthusiasts and professionals”, and Chris & Dave got onto the topic of custom logic implemented in an FPGA vs a hard or soft core processor (around 57 minutes into episode 98). This discussion is very close to my current work, and since I’m probably in a very small minority, I figure I should speak up.

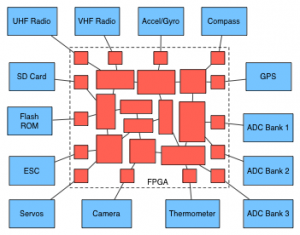

If you look closely at the avionics I’ve developed, you’ll notice there is only an FPGA, with no processor, handling the functionality of this device. There is an 8-bit Atmel, but it’s merely a failsafe. So to make my position clear: everything in Asity is (or will be) implemented in custom logic.

Chris & Dave didn’t go into great depth as it was just a side note in their show, so I’ll do my best to go through a few alternative architectures. I’ll also stick with Asity as the example, given my lack of experience. I am just a Software Engineer after all.

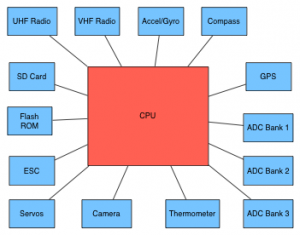

The goals here are to retrieve data from several peripheral devices, including ADCs, accelerometers and gyroscopes among many others; process that data to produce intelligent control; and then output to various actuators such as servos. When designing such hardware, a decision has to be made as to what processor(s) will be in the middle.

The first example is the one I’ll refer to as the traditional approach. This includes a single CPU that interfaces with all peripherals and actuators, much like you would find in your desktop PC from 5 years ago, or your phone today. This is the architecture used in ArduPilot and many other avionics packages.

Modern processors are capable of executing billions of instructions per second. What can be done with those instructions depends on the instruction set used and the peripherals available to the CPU.

The major limitation with this architecture is that a single CPU core can only attend to one thing at a time. This means it can only service one interrupt, or perform one navigational calculation, etc., at a time. To keep up with all the data gathering and processing required, a designer must be either very talented or lucky. Either way, they still need to spend time developing a scheduling algorithm.
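
To make that single bottleneck concrete, here is a toy Python simulation of a cooperative "superloop", a common single-core pattern (it is not taken from ArduPilot or any real autopilot). The task names, periods and costs are hypothetical, in arbitrary time units. Each task runs to completion before the next gets the core, so one long task delays everything queued behind it.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    period: int        # hypothetical: how often the task should run (time units)
    cost: int          # hypothetical: how long one run occupies the core
    next_due: int = 0  # time at which the task next becomes ready


def run_superloop(tasks, duration):
    """Simulate one core running ready tasks strictly one after another.

    Returns, per task, the worst lateness seen: how long after becoming
    ready the task actually got the core.
    """
    now = 0
    worst_late = {t.name: 0 for t in tasks}
    while now < duration:
        ran_something = False
        for t in tasks:  # fixed order: earlier tasks always go first
            if now >= t.next_due:
                worst_late[t.name] = max(worst_late[t.name], now - t.next_due)
                now += t.cost          # the core is busy; nothing else can run
                t.next_due += t.period
                ran_something = True
        if not ran_something:
            now += 1                   # nothing ready: idle for one time unit
    return worst_late


if __name__ == "__main__":
    tasks = [Task("imu", 10, 2), Task("nav", 50, 15), Task("servo", 20, 1)]
    print(run_superloop(tasks, 100))
```

With these hypothetical numbers the one-unit servo task can start 17 units late, purely because the 15-unit navigation task happened to be ahead of it in the loop.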

In a single-core architecture with nondeterministic inputs or algorithms, it can be impossible to guarantee that everything is serviced in time. The usual alternative is to make sure the CPU is significantly overpowered, which costs extra money and power.
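
When the worst-case execution time and period of every task are known, one classic check for "serviced in time" on a single core is the Liu & Layland utilization bound for rate-monotonic (fixed-priority, shortest-period-first) scheduling. The Python sketch below uses a hypothetical workload. The test is sufficient but not necessary, so failing it does not prove a deadline will be missed. It also shows why over-powering works: a core twice as fast halves every execution time.

```python
def utilization(tasks):
    """Total CPU utilization U = sum(C_i / T_i) for (cost, period) pairs."""
    return sum(cost / period for cost, period in tasks)


def liu_layland_bound(n):
    """Rate-monotonic utilization bound n * (2**(1/n) - 1) for n tasks."""
    return n * (2 ** (1 / n) - 1)


def passes_rm_bound(tasks):
    """Sufficient (not necessary) schedulability test for rate-monotonic
    scheduling, assuming independent periodic tasks with deadline == period."""
    return utilization(tasks) <= liu_layland_bound(len(tasks))


# Hypothetical workload: (worst-case cost, period) in the same time units.
tasks = [(1, 4), (2, 6), (3, 10)]
faster_cpu = [(c / 2, t) for c, t in tasks]  # same work on a core 2x as fast

print(round(utilization(tasks), 3), round(liu_layland_bound(3), 3))
print(passes_rm_bound(tasks), passes_rm_bound(faster_cpu))
```

Here U ≈ 0.883 exceeds the three-task bound of ≈ 0.780, while the halved costs give U ≈ 0.442 and pass comfortably.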

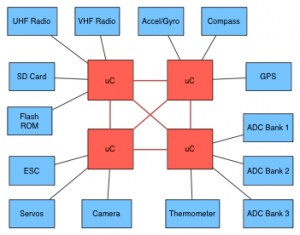

Next we have the multiple CPU core architecture. This could be either a multi-core CPU like a modern PC, or several independent CPUs/microcontrollers. A couple of avionics packs, such as the AttoPilot, make use of the 8-core Parallax Propeller.

This architecture allows tasks and interfaces to be serviced in smaller, independent groups, which simplifies scheduling logic and allows a reduction in clock speed. It also introduces the extra complexity of managing the communication between the cores. While this improves the situation over the single-core architecture, each core is still fundamentally limited by a single execution bottleneck.
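
One common way to manage that inter-core communication is a single-producer, single-consumer ring buffer, where each index is only ever written by one side. The Python class below is a sketch of the logic only, assuming exactly one writer and one reader; a real multi-core version in C would also need atomic index updates and memory barriers, which this model does not capture. Its `pop` returns None when empty, so it assumes None is never sent.

```python
class SpscRing:
    """Fixed-size FIFO for one producer core and one consumer core.

    One slot is always left empty so 'full' and 'empty' can be told
    apart using only the two indices.
    """

    def __init__(self, capacity):
        self._buf = [None] * (capacity + 1)
        self._head = 0  # next slot to read; only the consumer updates it
        self._tail = 0  # next slot to write; only the producer updates it

    def push(self, item):
        """Producer side: returns False instead of blocking when full."""
        nxt = (self._tail + 1) % len(self._buf)
        if nxt == self._head:
            return False
        self._buf[self._tail] = item
        self._tail = nxt
        return True

    def pop(self):
        """Consumer side: returns None when there is nothing to read."""
        if self._head == self._tail:
            return None
        item = self._buf[self._head]
        self._head = (self._head + 1) % len(self._buf)
        return item


ring = SpscRing(2)
ring.push("gyro sample")
print(ring.pop())
```

Returning False when full, rather than waiting, keeps the producer core's timing independent of how quickly the consumer drains the queue.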

The final architecture I’ll discuss is complete custom logic. This is the architecture I’ve used in Asity and the one that makes the most sense to me as a computer scientist. I’ve chosen to implement this in a single FPGA, but this architecture can be spread over many devices without significantly altering the software.

In this architecture, each peripheral device is serviced by dedicated silicon that never does anything else. This allows great flexibility in interrupt timing: samples can be taken at the natural or maximum rate of the device without increasing the burden on computational resources. Internal logic modules are also dedicated to specific tasks, such as navigation and attitude calculations, without ever being interrupted by an unrelated task.
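
In an FPGA, this kind of dedicated sampling is often driven by a per-peripheral clock-enable counter that divides the system clock down to the device's own rate. The Python model below is a cycle-level sketch, not HDL. The 100 kHz system clock and the sensor names and rates are hypothetical, chosen so the loop runs quickly. Every divider is advanced on every clock cycle, so no peripheral ever waits on another.

```python
class StrobeGen:
    """Cycle-level model of a clock-enable divider dedicated to one peripheral."""

    def __init__(self, clk_hz, rate_hz):
        if rate_hz <= 0 or rate_hz > clk_hz:
            raise ValueError("rate must be between 1 Hz and the clock rate")
        # Integer division: rates that don't divide the clock evenly are approximate.
        self.divisor = clk_hz // rate_hz  # system clock cycles between samples
        self.count = 0

    def tick(self):
        """Advance one system clock cycle; return True on a sample cycle."""
        self.count += 1
        if self.count == self.divisor:
            self.count = 0
            return True
        return False


CLK_HZ = 100_000  # hypothetical system clock, kept low so the model runs fast
sensors = {"adc": 10_000, "accel": 1_000, "gyro": 800}  # hypothetical rates (Hz)
gens = {name: StrobeGen(CLK_HZ, rate) for name, rate in sensors.items()}
samples = {name: 0 for name in sensors}

for _ in range(CLK_HZ):  # simulate one second of clock cycles
    # In hardware all dividers update in parallel on the same clock edge;
    # this inner loop is only the sequential stand-in for that.
    for name, gen in gens.items():
        if gen.tick():
            samples[name] += 1

print(samples)
```

Adding another sensor here means adding another divider; nothing about the existing ones changes, which mirrors adding another block of dedicated logic.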

In both CPU-based architectures, a significant portion of development effort is required to schedule tasks and manage communication between them. In fact, a large portion of Computer Science research is spent on scheduling algorithms for multitasking on linear sequential processors. Truth be told, sequential processors are a very awkward way of making decisions, especially if the decisions aren’t sequential in nature. They have proven useful in extensible software systems like a desktop PC, as long as there is an operating system kernel to manage things.

Any software designer worth their salt is capable of a modular design which will quite naturally map to custom logic blocks. These blocks can be physically routed within an FPGA fabric allowing data to flow along distinct processing paths which don’t have to deal with unrelated code.
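
As a rough software analogy for that modular dataflow, here is a Python sketch in which each stage is a generator that only consumes the output of the stage before it. The stage names, gain, filter length and limits are hypothetical. Unlike FPGA blocks, these generators still take turns on one core, so the analogy is about structure, not parallelism.

```python
from collections import deque


def scale(samples, gain):
    """Stage 1: convert raw counts to engineering units."""
    for s in samples:
        yield s * gain


def moving_average(samples, n):
    """Stage 2: n-tap boxcar filter; emits once the window is full."""
    window = deque(maxlen=n)
    for s in samples:
        window.append(s)
        if len(window) == n:
            yield sum(window) / n


def clamp(samples, lo, hi):
    """Stage 3: limit the result to the actuator's range."""
    for s in samples:
        yield max(lo, min(hi, s))


raw = [0, 10, 20, 30, 40]  # hypothetical raw sensor readings
out = list(clamp(moving_average(scale(raw, 0.5), 2), 0.0, 12.0))
print(out)
```

Each stage has a single input and a single output and knows nothing about the others, which is the property that lets such a design map onto separate logic blocks.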

The downside to custom logic is, of course, time and money. FPGAs are still quite expensive, two orders of magnitude more expensive than a processor suitable for the same task. There also aren’t as many code libraries available for synthesis as there are for sequential execution, so a lot has to be written from scratch.

A small price to pay for deterministic behaviour.

With such a system, using a processor that needs guaranteed, deterministic real-time performance for its tasks, you’d use a real-time OS (RTOS); that’s precisely what they were developed for. There are plenty available for almost every core out there. Little experience or fuss is needed in most cases: it takes care of all the determinism for you without your having to learn much, if anything, new. Just use your existing, familiar C, with a few small hooks into the RTOS.

An RTOS is a necessary solution for a CPU-based architecture once the system is too large or complex to handle otherwise, but it’s still limited by the single point of execution.

I’ve used an RTOS on small systems; they aren’t just for complex systems. Look at the Rabbit processors, for example: they make RTOS capability really easy to use on projects of any size.

Yes, it’s limited by a single point of execution, but it can usually meet your guaranteed deterministic needs.
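
Whether a fixed-priority preemptive RTOS will "usually" or always meet its deadlines can be checked with classic response-time analysis, given each task's worst-case execution time C and period T: iterate `R_i = C_i + sum over higher-priority j of ceil(R_i / T_j) * C_j` until it stops changing. The Python sketch below assumes independent periodic tasks, deadlines equal to periods, and zero RTOS overhead (context switches and blocking are ignored). The task numbers are hypothetical.

```python
def response_times(tasks):
    """Worst-case response time for each (cost, period) task.

    Tasks must be listed highest priority first. Returns None for any task
    whose response time exceeds its period (i.e. it can miss a deadline).
    """
    results = []
    for i, (c_i, t_i) in enumerate(tasks):
        r = c_i
        while True:
            # Preemption by every higher-priority task released within r;
            # -(-a // b) is integer ceiling division.
            interference = sum(-(-r // t_j) * c_j for c_j, t_j in tasks[:i])
            r_next = c_i + interference
            if r_next > t_i:
                results.append(None)   # deadline can be missed
                break
            if r_next == r:
                results.append(r)      # fixed point reached
                break
            r = r_next
    return results


# Hypothetical integer (cost, period) pairs, shortest period = highest priority.
print(response_times([(1, 4), (2, 6), (3, 10)]))
print(response_times([(2, 4), (3, 6)]))
```

The first set meets every deadline, with the lowest-priority task finishing exactly at its deadline; the second set, at 100% utilization, does not.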

The FPGA solution is nice, but possibly overly complex and not always the best solution. It also requires a different skill set and toolset if you are used to micros.

“Usually” 😛

The issue I have with an RTOS, or even higher-level languages, is that they oversimplify things. Granted, that’s their point, but all the timing issues still exist, and you either deal with them in an abstract sense or, worse: don’t think about them at all.

I guess I’m arguing from an idealistic perspective. The real world is obviously not going to move from micros any time soon.

It’s just another design balancing act.

You need to balance performance requirements against development vs hardware costs (with the balance determined by production volume).

Most scenarios don’t require extreme “real-time” processing, especially when talking to the outside world.

Is there any real-world difference in updating an RC servo position 20 vs 1,000,000 times a second?
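
For context, a typical hobby RC servo is driven by one pulse per frame of roughly 20 ms (about 50 Hz), with the pulse width, commonly around 1 to 2 ms, encoding position. Exact figures vary by servo, so the constants below are assumptions, and the function names are illustrative. This Python sketch maps a normalised position to a pulse width and shows that commands sent faster than the frame rate are never seen by the servo.

```python
FRAME_US = 20_000      # assumed frame period: 50 Hz
MIN_PULSE_US = 1_000   # assumed pulse width at one end of travel
MAX_PULSE_US = 2_000   # assumed pulse width at the other end


def pulse_width_us(position):
    """Map a position in [-1.0, 1.0] to a pulse width in microseconds."""
    position = max(-1.0, min(1.0, position))  # clamp out-of-range commands
    centre = (MIN_PULSE_US + MAX_PULSE_US) / 2
    half_span = (MAX_PULSE_US - MIN_PULSE_US) / 2
    return round(centre + position * half_span)


def effective_updates_per_second(requested_hz):
    """The servo only picks up a new command once per frame."""
    return min(requested_hz, 1_000_000 // FRAME_US)


print(pulse_width_us(0.0), pulse_width_us(0.5))
print(effective_updates_per_second(1_000_000))
```

Under these assumptions, updating the commanded position a million times a second still only reaches the servo 50 times a second.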

(I am just a Software Engineer too, and have moved a project from ATMega to FPGA. But the performance I am able to get from a standard cheap micro was pretty impressive.)

I agree that it’s a balance. As has been proven by the market, FPGAs don’t make it into nearly as many designs as micros. I’m lucky that I’m building this in an academic context and don’t have a time or production budget to meet.

Real-time is more about determinism than speed. I agree there isn’t much point updating servos faster than 50Hz, but I’d need them to ‘always’ update at 50Hz: not 48, not 55. This is hard to guarantee when your processor is shared with other tasks like intermittent data transfers or compression algorithms. Servos are a forgiving example, but there are many others.
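
To make "not 48, not 55" measurable, one simple approach is to timestamp each servo update and compare every interval with the nominal 20 ms period. The Python sketch below does that; the timestamps are hypothetical, with one late interval (about 48 Hz) and one early one (about 55 Hz).

```python
def interval_rates_hz(timestamps_us):
    """Instantaneous update rate for each consecutive pair of timestamps."""
    return [1_000_000 / (b - a) for a, b in zip(timestamps_us, timestamps_us[1:])]


def worst_period_error_us(timestamps_us, nominal_period_us):
    """Largest deviation of any update interval from the nominal period."""
    intervals = [b - a for a, b in zip(timestamps_us, timestamps_us[1:])]
    return max(abs(iv - nominal_period_us) for iv in intervals)


NOMINAL_US = 20_000  # 50 Hz
stamps = [0, 20_000, 40_000, 60_833, 79_015]  # hypothetical update times (us)

print([round(hz, 1) for hz in interval_rates_hz(stamps)])
print(worst_period_error_us(stamps, NOMINAL_US))
```

The average rate over a window can look fine while individual intervals drift, which is why the worst-case interval error is the figure to watch.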